Software Performance: A Statistical Lens

Introduction

Software performance is typically measured along two primary dimensions:

Throughput (operations per unit of time)

Latency (time taken to complete a single operation).

Benchmarks for these figures are typically measured as average values taken over a long sequence of repetitions of operations. However, in practice these values are actually distributions of values drawn from a statistical distribution.

For low latency systems like storage software, the tail of the latency distribution is often as important as the average latency. Replicated storage systems perform writes invoking RPCs against multiple storage nodes, and large reads + reconstructions fanning out to a significant number of storage nodes. In these cases high tail latencies have a significant impact on overall operation latency. In this series of notes, I’ll explore the types of optimizations I’ve used or have seen to optimize for low latency. These techniques are all applicable to any high performance, low latency service.

To begin, I’ll start with a more theoretical overview of how individual operations contribute to the statistical execution time distributions. Major sources of latency and variance are outlined, while later notes discuss some ways software can address these issues.

Preliminaries

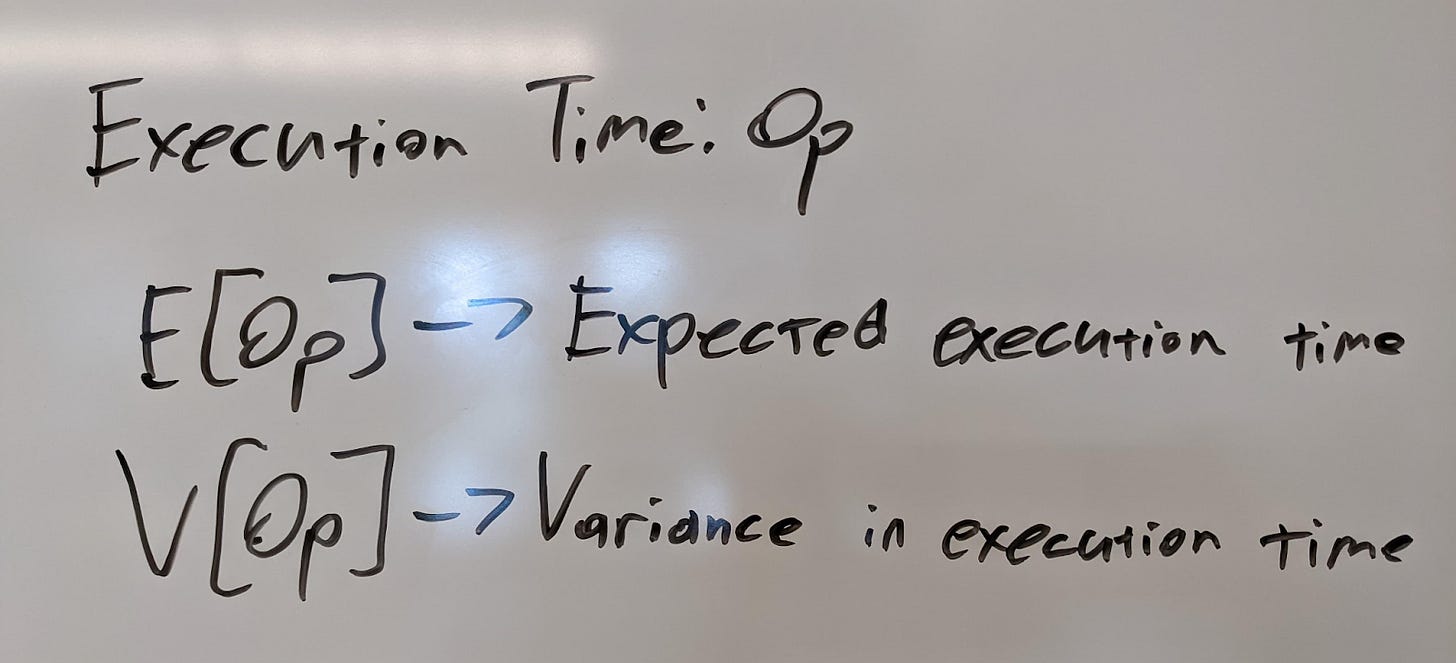

At the lowest levels of software, individual CPU operations like memory accesses, conditional branches and locks do not have deterministic execution times. Instead, they each have an execution time distribution (outlined below) based on various factors such as CPU branch prediction accuracy, memory locality, and lock contention (among many other factors). This distribution can be characterized by an expected execution time and variance:

Consider each program or higher level operation (such as a malloc()/free() call) as a sequence of multiple such operations:

As a first order approximation, lets assume independence of execution time of individual operations (which is a stretch given CPU caches and the resulting correlation between operations due to memory locality, but let’s handwave here), then the expected execution time and variance of a higher level operation such as an allocation/API call is described as follows:

Under the independence assumption, estimate the expected execution time and variance for a higher level operation as the sums of the expected execution times and variances of the individual sub-operations (memory accesses, branches, and locks).

Soft Realtime

So why does all of this talk of latency distributions even matter after all? In computing one particular subset of systems include so called soft and hard realtime systems. Hard realtime systems have absolute deadlines to complete an operation. Think of flight control software on aircraft. Each iteration of the software control loop must complete within a fixed timeslice or the correctness of the system is violated.

Soft realtime systems are a subset of hard realtime, where bounded latency of operations is highly desired, but correct operation of the system does not depend on it. A database system where each query a customer submits has a latency SLA is a good example. More pertinently, a read or write to storage systems has a certain SLA on completion time in the form of a customer timeout. Requests must be completed within a given time limit to be useful to our customers, but correctness is not violated if the time limit is exceeded.

In this light, modeling execution time distributions becomes critically important to meeting the low latency targets which storage service customers depend on. Next let’s build an understanding about the characteristics of the execution time distribution of the fundamental operations our software is composed from.

Execution Distributions

To make this abstract discussion more concrete, let’s consider a sequence of common operations and their notional execution time distributions.

Memory Access

Starting from first principles, consider the execution time of a single memory access, in the below example a pointer dereference:

void dereference(T* ptr) {

auto value = ptr->field;

// ...

}Modern CPUs have multiple levels of cache, with varying hit rates and access times at each level of cache. Thus the distribution of execution times for a single memory access is multi-modal, with exact probabilities based on cache hit rates and access latencies of each level of cache + DRAM:

The most likely result is a fast L1 cache hit, followed by subsequent peaks for L2, and L3 cache misses. Followed by DRAM access if all cache misses. Beyond the simple model here, additional latency/variance could be introduced by TLB misses, or page faults (discussed later). Point being, even a simple operation like dereferencing a pointer inherently contributes some amount of variance to the overall execution time.

Branches

Modern CPUs have multi stage execution pipelines, with many different instructions in various stages of completion at any point in time. The outcome of conditional branch instructions is determined much later in the pipeline than where the next instruction is fetched. Thus, the CPU must predict the outcome and target of each branch and speculatively continue execution based on the predicted outcome and branch target.

If the prediction is correct, then execution continues efficiently with the instructions which already began execution speculatively. But the prediction is incorrect, the pipelines must be flushed, the speculatively executed instructions discarded, and the correct branch target taken instead. A nominal distribution of execution times can be seen below:

Exact performance depends on the accuracy of the branch predictor, which is typically in the range of 95%+ on average in typical workloads, and the length of the pipeline. Longer CPU pipelines lead to worse misprediction penalties as more in flight instructions must be discarded.

Locks

Locks are among the worst contributors to execution time variance. Consider the following notional distribution for lock acquisition:

A few takeaways/characteristics for the latency distribution:

It’s unbounded, for multiple reasons

Deadlock is always possible

The lock holder thread can be preempted by a kernel worker or another thread preventing the thread for acquiring the lock

The lock holder can be unexpectedly blocked by:

An interrupt from a NIC or storage device

A page fault

A TLB miss

Even the uncontended case is expensive if the mutex was last touched by another core and the cache line must be migrated to the current core (future note will discuss this).

Points 1.2 and 1.3 are especially relevant for client side code. For environments where the service owner has absolute control of thread affinity, interrupt pinning, and the OS scheduling policy, preemption of lock holders can be reduced or perhaps eliminated (a later note explores system level optimizations for low latency).

That is absolutely not the case when a client library is embedded into an application. The operating environment can not be controlled at all, and all bets are off on lock holder preemption. For these reasons, locks are fundamentally incompatible with soft realtime code. At best, applications can use try_lock() and bail out to another path or retry later if possible, rather than blocking the user space thread. The resulting context switch is pretty painful due to the second order impacts it can have (cache line eviction of hot data associated with the current IO, for example).

Memory Allocation

Next up, memory allocation. While many modern allocators like tcmalloc or jemalloc have high performance in most cases, that’s not always the case. For example, one highlight of JEMalloc is it employs a multi-tier allocation strategy:

Small allocations are first attempted against a very fast lockless per-thread tcache

Allocations which miss the tcache (allocation size is too large, or the tcache is empty) then hit a set of shared arenas

These arenas are protected by locks, each arena protected from concurrent access by its own lock

When allocations miss the arena (arenas run out of free memory, or extremely large allocations), then call mmap() or equivalent for the host OS

In Linux, this means a system call + mmap() updates to the process level memory mappings protected by a single process scoped lock

Due to the hierarchy of allocation strategies, jemalloc performance can be highly variable. The vast majority of the time small allocations hit the tcache without locks or such. Misses on the tcache cost significantly more, and arena misses are catastrophic to execution time variance. The above chart shows the notional distribution of allocation times. This does make the optimistic assumption that lock hold time is bounded. Technically this isn’t the case, so this is the best case scenario. Given the presence of locks in memory allocations, traditional memory allocators are not suitable for soft realtime systems due to their impact on tail latency.

An additional complication comes from cross thread allocate-free pairs. The allocation may come from one threads tcache, but the free on a different thread must return back to a shared arena vs. lockless tcache. On multiple occasions, I’ve seen significant CPU cost (easily 2-3%) on calls to free() because cheap tcache hits in malloc() on one thread become expensive arena accesses on a different free()ing thread.

Pervasiveness Of Allocations

Allocations are incredibly prevalent in many C++ applications. Most allocations are hidden behind classes which use dynamic memory allocation out of site of the developer. Some examples of hidden allocations:

Strings

Every* std::string is a memory allocation (* = except for very small strings which benefit from the Small String Optimization and fit inline)

class MyClass {

public:

// String copy requires allocation due to copy constructor

// allocating memory for the field_ std::string

explicit MyClass(const std::string& str) : field_(str) {}

private:

std::string field_;

};Futures/Coroutines

Modern C++ code increasingly uses Futures or coroutines for asynchronous code. However, each future/coroutine is a memory allocation. Worse yet, each continuation attached to a future is at least one more allocation as the continuation itself returns a future and associated allocation. For example, the following example below performs at least 3-4 allocations.

folly::Future<int> asyncApi() {

// ...

}

// Each folly::Future<>/folly::Promise<> pair requires an

// allocation. Each .thenTry()/via() and such call creates

// a new future and thus a new allocation. This example is

// 3-4 allocations hidden within the future chaining

asyncApi().thenTry([] (folly::Try<int> res) {

return processResult(res);

}).via(someExecutor).thenTry([](folly::Try<int> res) {

return processResultInOtherExecutor(res);

);Each call to the following folly::Future APIs performs an allocation:

folly::Future::then()folly::Future::thenValue()folly::Future::thenTry()folly::Future::via()... and every other

folly::FutureAPI which creates a newfolly::Futureobject

std::function

std::function must dynamically allocate a buffer to store the underlying functor/callable object. Both folly::Future and std::function do have inline storage (48 bytes for folly::Future and 16 bytes for most std::function implementations). As long as the functor/callable object size is under those limits, dynamic allocation can be elided. However, callable objects/lambdas larger than those limits trigger a heap allocation.

Container Reallocation

Mutations to common container types (std::vector, std::unordered_map) can trigger allocation.

std::unordered_map<int, int> map;

auto key = getKey();

auto value = getValue();

// Triggers a re-hash of the table, everything is reallocated and moved

map[key] = value;Each of these allocations has the potential to miss the tcache or even allocator arenas and suffer increased variance when locks are taken on the shared allocator arenas or even system calls to fetch free memory from the operating system.

System Level Variance

Most of what we’ve discussed so far was focused on the operations that compose the software we write ourselves. However, there are many other sources of variance in software systems. Some of the others occur at the host/system level, from the operating system and hardware abstractions in use.

Context switches

Threads are given the illusion of continuous sequential execution on a CPU. However, in practice Linux and modern operating systems will multiplex M runnable threads on N CPU cores. If the number of runnable threads M exceed the number of CPU cores N, those threads will periodically be context switched out, leading to 100s of microseconds or even 10s of milliseconds worth of stalls

System calls

System calls trash the cache on the core running the thread executing the system call, hampering performance on return from the system call due to application code and data being evicted from the cache

Depending on the call, the system call itself likely takes locks inside the kernel as part of it’s execution, further increasing variance

Interrupts

High performance NICs will spit out millions of interrupts per second to handle packet processing. Each of those interrupts will momentarily steal the CPU core from the running thread on that core for some period of time. The now interrupted thread will be stalled, and memory accesses on behalf of the interrupt handler will evict parts of the L1/L2 caches which were in use by the application thread.

TLB misses

The very act of loading a memory location can trigger a Translation Lookaside Buffer miss when performing the virtual → physical memory address translation. TLB misses trigger page table walks in the CPU virtual memory hardware which performs expensive operations to determine the correct virtual → physical mapping and install it in the processor’s TLB

Page faults

Any memory access can trigger a page fault, which come in two varieties, soft and hard

Hard page faults occur for pages that are off on disk and need to be read in from swap

Soft page faults occur for pages that are not (yet) mapped to a physical address, but which do not require a read from backing memory (such as the first time an anonymous page is accessed).

In a later note we’ll cover each of these sources of variance in depth

Variance = Tail Latency

Going back to our original premise of thinking about storage software as a soft realtime system. The statistical lens here highlights both average execution time and variance as considerations when designing and optimizing software. One key point is tail latency and execution time variance are equivalent. When considering operation execution time as a distribution, we want to reduce variance to bring down the aggregate tail latency.

If we think of the total application execution time as the sum of each operation, then reducing the # of operations (complexity of the software) and the amount of variance of each operation (choosing operations with low, bounded latency) will contribute to lower tail latency. In some cases, it’s even worth trading average case latency in exchange for bounds on tail latency (eg. simple data structures that do not require memory allocation but have slower average time lookups).

Takeaways

From the above discussion, let’s derive some high level take-aways

Benchmarks lie, report average time, not variance

Benchmarks nearly always offer the average/expected performance, and tell us nothing about the variance nor worst case execution times

Example: A cache in front of an ACL checker may give 25 nanosecond lookup times for cache hits 99% of the time, but the 1% of misses trigger a network call that takes 1000000 nanoseconds. Average performance might be on the order of 1000 nanoseconds, but it obscures the very high variance

Corollary: Nearly any benchmark of a cache backed API will be wildly inaccurate unless the hit/miss rate expected in production has been carefully simulated. Naive benchmarks of cached APIs are always wrong because they only fetch a small set of values and have a 100% hit rate.

Variance == Tail Latency

We speak of SLAs (Service Level Agreements) in terms of tail latency, and variance is synonymous with tail latency

Many storage system SLAs are defined in terms of tail latency (P90 or P99), thus variance is the enemy of latency SLAs

Average Case vs. Worst Case tradeoffs

Many data structures offer a tradeoff between lower average case execution time with higher variance

Example: Insertions into a hash table, inserts are normally very very fast, until a rehash occurs, memory allocations kick in, and worst case latency increases. Many operations we typically consider as O(1) are actually only amortized O(1) not absolute

Caches always trade off average case for worst case

Locking and cross thread coordination are among the worst contributors to variance, and thus tail latency

Locking, and by extension memory allocations, are some of the most costly operations in terms of added variance in a system, and are totally incompatible with low latency, soft realtime systems like storage systems, databases and operating systems

Per the charts above, JEMalloc and other modern allocations are highly optimized and incredibly fast...most of the time

Locks are particularly nasty, as they do not have bounded latency due to preemption and potential deadlocks/livelocks

System level variance/jitter is also very significant

Addressing system level variance is a major topic to be addressed in a later note

This whole series looks great. Thank you for putting it together. I'd love to learn this stuff when I can find some time.

I noticed you haven't got a lot of feedback and you haven't written for a while. I wanted to encourage you to keep writing as long as feel you have more to say.