Software Performance: Cache Locality And Allocation

Introduction

In our first note we discussed the tail latency ↔ variance equivalence. We also outlined a simplistic model to decompose software into a sequence of operations, each such operation has an execution time distribution characterized by both an expected execution time + variance. The execution time for software then becomes a linear combination of expected execution times and variances of the sub-operations. Based on this knowledge, we understand there are two ways to reduce tail latency:

Fewer operations in total that contribute to variance (lower complexity of the software)

Eliminate the highest variance operations in the software/system

Storage software I’ve worked on aggressively applies both approaches, keeping operations as simple as possible. Application code elsewhere appears short and concise, but this is misleading because such application code frequently calls into heavy weight abstractions which obfuscate their true cost.

Cache Misses/Locality

The first line of optimizations to achieve soft realtime performance centers on reducing cache misses. Most cache optimizations are based on improving cache locality by reducing cache misses. There are three primary categories for a cache miss:

Capacity misses

The cache is too small to hold all the needed data at once, useful data has to be evicted too early before the application is done using it

Locality misses

A cache line is accessed for the first time, or after a significant delay, and the cache does not have a copy of the cache line holding the desired data

Conflict misses

Internally caches have limited structure that can trigger misses due to multiple cache lines aliasing to the same cache sets, a very specific subset of capacity misses, and outside the scope of our work here

Memory access patterns will affect these different miss types. Random access patterns (following pointers to random memory locations) tend to result in locality misses, as the desired memory location is not yet in cache. Streaming sequential access patterns tend to lead to capacity misses by forcing useful data out of the cache as a large number of cache lines are accessed over a short duration. One caveat to streaming access patterns though is that CPUs attempt to optimize them by pre-fetching data in advance. Modern CPUs are very good at prefetching sequential access streams.

With preliminaries out of the way, let’s look at some optimizations. These mostly come down to putting commonly used data close together, or keeping the size of structs and data lower.

Field Inlining

Avoid storing a pointer if not needed, and keep struct fields inline if possible. A pointer dereference is likely to be a cache miss unless that object was recently accessed. For example, consider the following code:

struct MyStruct {

// Unique ownership, not shared with others

std::unique_ptr<SubStructA> a;

std::unique_ptr<SubStructB> b;

std::unique_ptr<SubStructC> c;

};

std::unique_ptr<MyStruct> foo;

/**

* Likely four cache misses:

* 1. Pointer dereference for *foo is a cache miss

* 2. Pointer dereference for a->fieldA is a cache miss

* 3. Pointer dereference for b->fieldB is a cache miss

* 4. Pointer dereference for c->fieldC is a cache miss

*/

callFunc(foo->a->fieldA, foo->b->fieldB, foo->c->fieldC);

// Instead, keep inline

struct MyStruct {

SubStructA a;

SubStructB b;

SubStructC c;

};

MyStruct foo;

/**

* Assuming foo is on the stack and in cache, 0 cache misses

*/

callFunc(foo.a.fieldA, foo.b.fieldB, foo.c.fieldC);While inlining struct fields does increase the containing struct’s size, it does eliminate a pointer dereference that is very likely to be a cache miss. As a rule of thumb, assume that any pointer dereference is likely to trigger a cache miss, unless the memory referenced by the pointer was very recently accessed.

Alignment + Padding

When C/C++ compilers lay out a struct in memory, certain alignment rules must be followed: https://en.wikipedia.org/wiki/Data_structure_alignment. The impact is that the struct fields are not necessarily contiguous in memory, the compiler will insert padding between fields and structs with incompatible alignment. One useful tool to understand padding within a struct is pahole (https://linux.die.net/man/1/pahole).

Excessive padding bloats the struct size and can push it across multiple cache lines. Splitting across multiple cache lines can trigger capacity evictions to make room for extra (unneeded) space for the struct, evicting other useful data. It also means additional cache misses take place that could have been avoided if the struct was fit into a smaller amount of space and thus fewer cache lines.

struct MyStruct {

// 8 byte alignment

uint64_t bigInteger;

// 4 byte alignment

uint32_t smallInteger;

// <---- compiler inserts 4 bytes of padding to

// preserve 8 byte alignment for the next field

// 8 byte alignment

std::string someString;

// 1 byte alignment

bool flagA, flagB;

// <---- Compiler inserts 6 bytes of padding to

// preserve 8 byte alignment for the next field

// 8 byte alignment

std::unique_ptr<SubStruct> ptr;

};

// sizeof(MyStruct) == 40, alignof(MyStruct) == 8Padding can be eliminated by reordering the fields. Typically ordering the fields in either strictly increasing or decreasing order of the size/alignment requirement of each field will eliminate unneeded padding:

struct MyStruct {

// 8 byte alignment

uint64_t bigInteger;

// 8 byte alignment

std::string someString;

// 8 byte alignment

std::unique_ptr<SubStruct> ptr;

// 4 byte alignment

uint32_t smallInteger;

// 1 byte alignment

bool flagA, flagB;

};

// sizeof(MyStruct) == 30, alignof(MyStruct) == 8Clustering Fields

Sometimes certain struct fields are accessed on the request processing hot path, while other fields are rarely, if ever, accessed on the hot path. Placing the hottest fields used in the critical path close together at the start of the struct, and putting colder fields towards the end optimizes by generating a correlation between access times of the hot fields. Example:

// Before, fields are mixed through the struct,

// different cache lines

struct CommandContext {

uint32_t hotField;

void* coldField;

uint32_t* anotherColdField;

// ... more cold fields

uint8_t coldUUID[16];

uint32_t anotherHotField;

};

// After, keep hottest fields at start of struct

struct CommandContext {

uint32_t hotField;

uint32_t anotherHotField;

void* coldField;

uint32_t* anotherColdField;

// ... more cold fields

uint8_t coldUUID[16];

};The hottest fields at the start are loading into the cache on the first access to the hot fields. All subsequent accesses to those fields will hit the cache. The cold fields at the end do not occupy space in the leading cache line where the hot fields are located, so more room is available in the cache line for the most important and frequently accessed fields.

Bitpacking Structs

C/C++ support bitfields (https://en.cppreference.com/w/cpp/language/bit_field) to pack sub 8-bit integral values into a larger integral type. Bitpacking can significantly reduce the struct size, saving space and shrinking a struct from multiple cache lines to instead fit into a single cache line.

// Bad example

struct MyStruct {

// 1 byte each

bool A;

bool B;

bool C;

bool D;

// Only takes values in the range 0-3

enum class MyEnum : uint8_t {

kFirst = 0,

kSecond = 1,

kThird = 2,

kFourth = 3

} enumeration;

};

// sizeof(MyStruct) == 5

// Initialization, five 1 byte writes

MyStruct myStruct;

myStruct.A = false;

myStruct.B = false;

myStruct.C = false;

myStruct.D = false;

myStruct.enumeration = MyStruct::MyEnum::kFirst;

// Good example

struct MySmallerStruct {

union {

struct {

uint8_t A : 1,

B : 1,

C : 1,

D : 1,

enumeration : 4

}

uint8_t state;

}

};

// sizeof(MySmallerStruct) == 1

// Initialization, single 1 byte write

MySmallerStruct mySmallerStruct;

struct.state = 0;Intrusive Data Structures

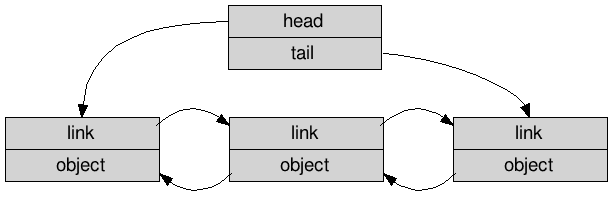

Intrusive data structures are a very under appreciated topic. Traditionally linked data structures store the underlying data item separately from the metadata with the pointers. Instead, high performance software aggressively uses intrusive lists. In particular, one option is to use boost::intrusive: https://www.boost.org/doc/libs/1_79_0/doc/html/intrusive/intrusive_vs_nontrusive.html.

In the above diagram representing a traditional linked list, each separate gray box is a separate memory allocation, almost certainly stored in different cache lines. Thus, accessing each object requires two cache misses, one to load the link, and a second to load the object itself. Instead, intrusive data structures embed the links into the struct itself. The following diagram demonstrates this:

Each object traversal only triggers a single cache miss, to fetch both the link metadata + the object itself in a single access.

Cache Local Data Structures

Much attention on data structures is heavily focused on the Big-O complexity. Unfortunately, there are often large constant factors obscured behind the Big-O data structure and algorithm complexity. Also, for small-ish data structures, caching can often have a bigger impact than sophisticated lookup algorithms. For example, storing a map as a sorted list of std::pair<>s in a std::vector<> (folly::sorted_vector_map, effectively) can be faster than std::unordered_map if the number of elements is small enough to fit in a handful of cache lines. Because the elements are contiguous, and the CPU prefetches cache lines in advance, a quick scan or binary search in the sorted vector could be faster than a std::unordered_map which triggers multiple cache misses. YMMV and benchmark first, as always.

Avoiding Allocations

From the first note in the series, memory allocations can heavily impact tail latencies. Avoiding or limiting dynamic memory allocation is thus one of the top optimizations used in storage systems and other soft realtime code (databases, operating systems, etc) to keep tail latencies in check.

Preallocation

Preallocate up front to avoid smaller allocations later, use fixed size structs to trade memory for CPU cost. One example is in work batching, pre-allocate everything up from in a fixed size buffer, and use a sub-set of that buffer if needed:

struct WorkBatch {

uint32_t numItemsInBatch;

/**

* Yes this takes many cache lines, but access pattern is sequential

* so the CPU cache prefetcher kicks in and automatically fetches

* future items in advance for us so it's not as bad

*/

std::array<WorkItem, kMaxBatchSize> items;

};

void processBatch(WorkBatch* batch) {

for (uint32_t idx = 0; idx < batch->numItemsInBatch; idx++) {

processItem(&batch->items[idx]);

}

}If the APIs in use require standard container classes like std::vector, pre-allocate capacity outside the critical path, and then fill it later inside the hot path, example:

// Outside the critical path

struct WorkBatch {

WorkBatch() {

items.reserve(kMaxExpectedItems);

}

std::vector<WorkItems> items;

}

WorkBatch batch;

// Inside the critical path

void buildBatch(WorkBatch* batch) {

for (uint16_t idx = 0; idx < neededItems; idx++) {

// batch->items already has pre-allocated capacity, no reallocations here

batch->items.push_back(createWorkItem());

}

}Inline Storage Optimizations

Some common data structures which perform dynamic optimizations also have a small size optimization to store small amounts of data inline to avoid dynamic allocation in certain cases.

folly::small_vector<T, K>→ Stores up to sizeof(T) * K bytes inline, spills to dynamically allocated memory otherwisefolly::Function→ Stores functor objects 6 * 8 = 48 bytes or smaller inlinestd::function→ Stores functor objects 2 * 8 = 16 bytes or smaller inlinefolly::fbstring→ Stores up to 24 bytes inlinestd::string→ Stores up to 15 bytes inline using Small String Optimization (SSO) in most implementations

The folly::Function and std::function examples are especially pertinent. Many times lambda functions are implicitly converted into either type. Keeping lambda capture lists short can be the difference between inline storage vs. dynamic allocation.

Avoiding Locks

As mentioned in earlier notes, real-time software very very aggressively avoids taking locks.

Conditional Locking

One approach to avoid blocking on locks is conditionally acquiring the lock and rely on a fallback path

if (lock.try_lock()) {

// Perform work while protected by lock

} else {

// Enqueue on an intrusive list to retry later or use alternative API

}A good example of conditional locking is found in a notional allocator cache:

SocketEntry* socketEntry = getSocketEntryForThread(threadIdx);

if (LIKELY(socketEntry != nullptr)) {

std::unique_lock<std::mutex> lock(socketEntry->mutex, std::defer_lock);

if (LIKELY(lock.try_lock() && socketEntry->head != nullptr)) {

threadEntry.stats.freeListHits++;

header = socketEntry->head;

socketEntry->head = socketEntry->head->next;

}

}

// Slowest path, allocate from the allocator directly, lots of contention and

// potentially syscalls

if (UNLIKELY(header == nullptr)) {

threadEntry.stats.allocatorHits++;

auto allocationSize = contextSize_ + sizeof(CacheHeader);

header = (CacheHeader*)(memoryAllocator_->allocForThread(allocationSize, threadIdx));

}Doing this in practice is rather tricky, as the algorithms must be re-designed to handle conditional locking. For example, code can put operations back onto a waiting list, and retry acquiring the lock later rather than blocking the main thread. This increases average execution time, while avoiding unbounded blocking calls to acquire the lock to lower variance.

Per-Thread/Core Data

Best way to avoid locking is to simply avoid sharing data structures across threads. Much realtime software has a strong convention to never share state across threads (with rare exceptions like lockless queues to pass requests/responses). No shared state → No locking. Keeping per-thread data has the additional benefit of reduced bugs from race conditions. Single-threaded code is always simpler and easier to reason about than multi-threaded code.

Tradeoffs

Some optimizations may conflict with one another. For example, preallocation and inlining structs tend to run against keeping structs small for cache locality. When considering conflicts between optimizations, the key is to eliminate the most expensive operation. Suffering an extra cache miss or two due to an inlined struct or array is dramatically better than hitting a lock on memory allocation. Key to making these tradeoffs is understanding the costs of different operations, and their potential variance. Below is a slide from a famous presentation Jeff Dean gave:

While these numbers have shifted since the talk was given back in 2010, knowing the approximate order of magnitude costs is important when weighing different tradeoffs.